![[R] You can't train GPT-3 on a single GPU, but you *can* tune its hyperparameters on one : r/MachineLearning](https://preview.redd.it/dqna8guklkm81.png?width=1838&format=png&auto=webp&s=2f7ba582a1cc949461dac8601a896034eaf0ff84)

[R] You can't train GPT-3 on a single GPU, but you *can* tune its hyperparameters on one : r/MachineLearning

Dylan Patel on Twitter: "They literally are able to train GPT-3 with FP8 instead of FP16 with effectively no loss in accuracy. It's just nuts! https://t.co/H4Lr9yuP3h"

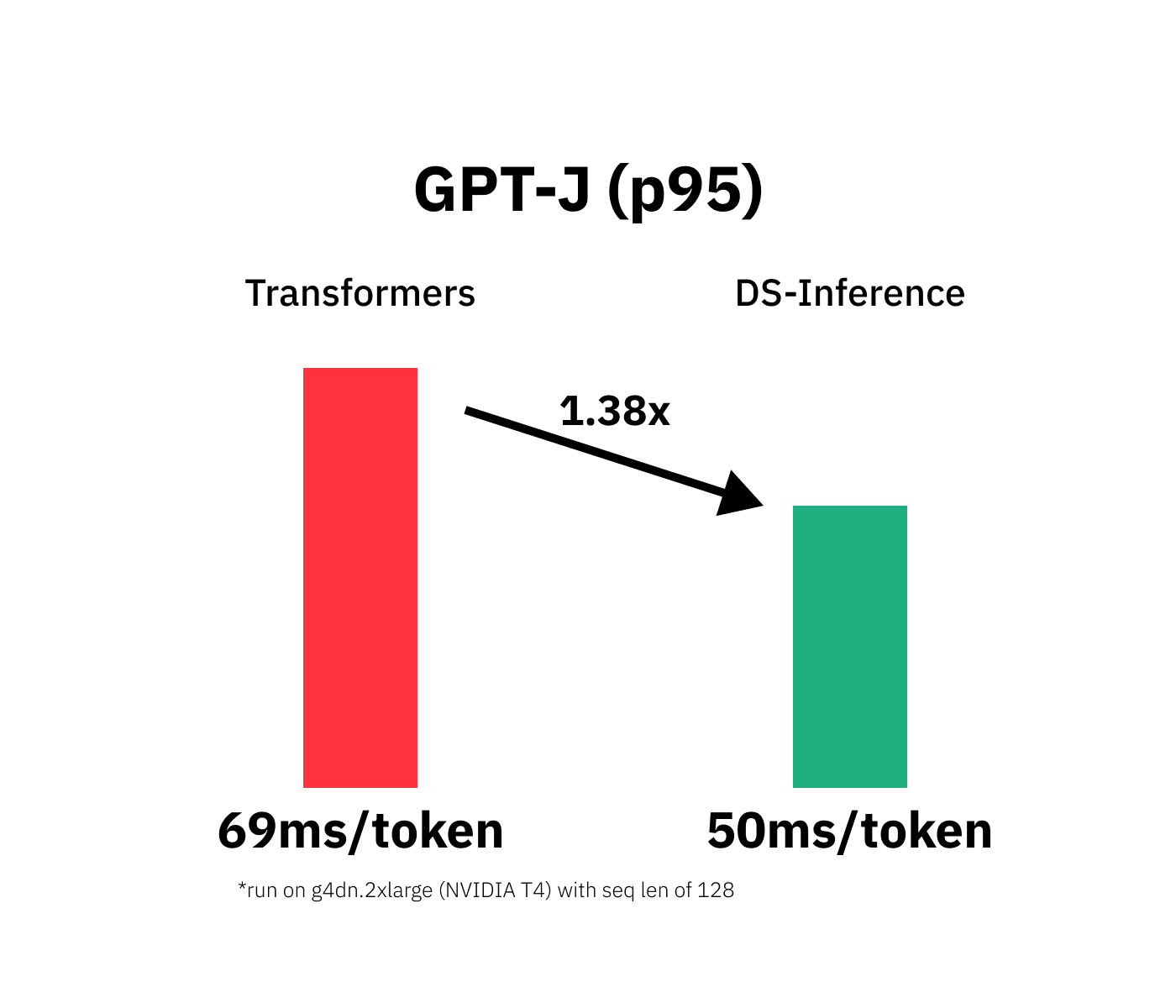

Surpassing NVIDIA FasterTransformer's Inference Performance by 50%, Open Source Project Powers into the Future of Large Models Industrialization

How many days did it take to train GPT-3? Is training a neural net model a parallelizable task? : r/GPT3

On the malicious use of large language models like GPT-3 | NCC Group Research Blog